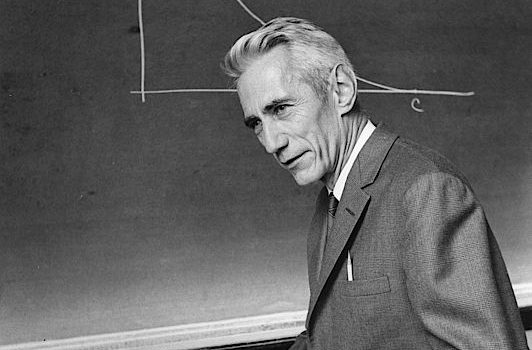

Claude Shannon’s source coding theorem, also known as the noiseless coding theorem, states that the average number of bits per symbol needed to losslessly encode an information source cannot be smaller than the entropy of the source, and that codes exist whose average length comes arbitrarily close to that entropy when long blocks of symbols are encoded together. In other words, entropy is the limit on lossless compression: it can be approached but not beaten. For a memoryless source this limit is the entropy of a single symbol; for a source with memory the corresponding quantity is the entropy rate. The theorem has applications in information theory, communication systems, and data compression.

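The entropy of a source measures the average uncertainty, or information content, of the symbols it emits. For a discrete source that emits symbol x with probability p(x), the entropy is H = −Σ p(x) log₂ p(x), which is measured in bits per symbol when the logarithm is taken to base 2. A fair coin has an entropy of 1 bit per toss, while a heavily biased coin has less, because its outcomes are more predictable. Entropy is defined from the probabilities alone; that it also equals the minimum average code length is the content of the source coding theorem rather than part of the definition.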
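To make the quantity concrete, here is a minimal sketch that computes entropy in bits per symbol using the standard definition H = −Σ p(x) log₂ p(x). The function names `entropy_bits` and `empirical_entropy_bits` are illustrative choices, not part of any library.

```python
import math
from collections import Counter


def entropy_bits(probabilities):
    """Shannon entropy in bits per symbol for a discrete distribution."""
    # Terms with p == 0 are skipped: p * log2(p) tends to 0 as p -> 0.
    return -sum(p * math.log2(p) for p in probabilities if p > 0)


def empirical_entropy_bits(message):
    """Entropy of the symbol frequencies observed in a message."""
    counts = Counter(message)
    total = len(message)
    return entropy_bits(count / total for count in counts.values())


fair_coin = entropy_bits([0.5, 0.5])                     # 1.0
biased_coin = entropy_bits([0.9, 0.1])                   # about 0.469
four_symbols = entropy_bits([0.5, 0.25, 0.125, 0.125])   # 1.75

print(fair_coin, round(biased_coin, 3), four_symbols)
```

The biased coin needs fewer than half a bit per toss on average, which is only attainable by encoding many tosses together rather than one at a time.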

The source coding theorem has two parts. The first is a lower bound: no lossless (uniquely decodable) code, however cleverly designed, can achieve an average number of bits per symbol below the entropy of the source. The second is achievability: by encoding sufficiently long blocks of symbols together, the average can be brought arbitrarily close to the entropy. For a code that assigns one codeword to each symbol of a memoryless source, the best achievable average length L satisfies H ≤ L < H + 1, and encoding blocks of n symbols shrinks the gap to less than 1/n bits per symbol.
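The limit can be checked against a concrete code. The sketch below builds a Huffman code, which is one well-known optimal symbol-by-symbol code (the text above does not name a specific method, so this choice is an assumption), and compares its average codeword length with the entropy. The four-symbol distribution in `source` is made up for illustration.

```python
import heapq
import math


def huffman_code_lengths(probabilities):
    """Return {symbol: codeword length in bits} for a Huffman code."""
    if len(probabilities) == 1:
        # A lone symbol still needs one bit per occurrence in a symbol code.
        return {symbol: 1 for symbol in probabilities}
    # Heap entries: (subtree probability, unique tie-breaker, {symbol: depth}).
    heap = [(p, i, {symbol: 0})
            for i, (symbol, p) in enumerate(probabilities.items())]
    heapq.heapify(heap)
    next_id = len(heap)
    while len(heap) > 1:
        p1, _, left = heapq.heappop(heap)
        p2, _, right = heapq.heappop(heap)
        # Merging two subtrees pushes every symbol in them one level deeper.
        merged = {s: depth + 1 for s, depth in {**left, **right}.items()}
        heapq.heappush(heap, (p1 + p2, next_id, merged))
        next_id += 1
    return heap[0][2]


def entropy_bits(probabilities):
    return -sum(p * math.log2(p) for p in probabilities.values() if p > 0)


def average_length(probabilities, lengths):
    return sum(p * lengths[s] for s, p in probabilities.items())


# Hypothetical source distribution, chosen only for the demonstration.
source = {"a": 0.4, "b": 0.3, "c": 0.2, "d": 0.1}
lengths = huffman_code_lengths(source)
H = entropy_bits(source)
L = average_length(source, lengths)
print(f"entropy = {H:.3f} bits/symbol, Huffman average = {L:.3f} bits/symbol")
```

For this distribution the entropy is about 1.846 bits per symbol and the Huffman code averages 1.9, so the code sits above the entropy but within one bit of it.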

The source coding theorem has important applications in communication systems and data compression. In communication systems, it gives the minimum bit rate (often loosely called bandwidth) needed to convey a source’s output without loss. In data compression, it gives a lower bound on the expected size of a compressed file under a given probabilistic model of the data, and so serves as a benchmark for evaluating how close different compression algorithms come to the limit.
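As a rough illustration of using the entropy as a benchmark, the sketch below draws symbols from a memoryless source with a known distribution, computes the entropy limit in bytes, and compares it with the output of Python's `zlib` compressor. The source distribution, sample size, and seed are assumptions made for the demonstration.

```python
import math
import random
import zlib

# Hypothetical memoryless source over four byte values (an assumption).
symbols = [b"a", b"b", b"c", b"d"]
weights = [0.5, 0.25, 0.125, 0.125]

entropy = -sum(p * math.log2(p) for p in weights)  # 1.75 bits per symbol

rng = random.Random(0)  # fixed seed so the run is repeatable
n = 100_000
data = b"".join(rng.choices(symbols, weights=weights, k=n))

bound_bytes = n * entropy / 8                   # entropy limit for n symbols
compressed_bytes = len(zlib.compress(data, 9))  # level 9 = best compression

print(f"raw size      : {len(data)} bytes")
print(f"entropy bound : {bound_bytes:.0f} bytes")
print(f"zlib output   : {compressed_bytes} bytes")
print(f"overhead      : {compressed_bytes / bound_bytes - 1:.1%}")
```

The per-symbol entropy is the right yardstick here only because the symbols are generated independently; for data with structure between symbols, such as text, the relevant limit is the entropy rate under a model that captures that structure.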

Overall, the source coding theorem provides a fundamental theoretical limit for lossless compression of data and has far-reaching implications for the efficient transmission and storage of information.